The Cadence Problem: Why Great VoC Work Struggles to Drive Change

There's a specific kind of defeat that VoC leaders know well. You've built a comprehensive report. Synthesized thousands of data points into clear themes. Included customer quotes, segment breakdowns, prioritized recommendations. Leadership nods along in the meeting. They thank you for the thorough work.

Then nothing changes.

I felt this repeatedly while building VoC programs at Asana and Figma. For years, I assumed the problem was execution. Maybe the insights weren't sharp enough. Maybe I needed better data visualization. Maybe I wasn't framing recommendations in the right language.

Recently, Kate Towsey articulated something that reframed the problem entirely: VoC reports "rarely succeed because their intensiveness means delivery tends not to match the audience's decision-making cadence."

That's it. That's the thing.

The problem isn't the report. It's a structural mismatch between how insights get delivered and how decisions actually get made.

The Mismatch No One Talks About

Think about the typical flow. You spend weeks synthesizing customer feedback into a quarterly report. You present it to leadership. They nod along, thank you for the thorough work, and promise to incorporate the insights into planning.

Then the quarter moves on.

The quarterly VoC report operates on a 90-day cycle while decisions happen continuously. Product planning follows its own rhythm. Leadership attention fluctuates weekly based on fires and priorities. Individual PMs make dozens of micro-decisions between your readouts.

Your report might be perfect. But if it lands when decisions have already been made, or before decisions are ready to happen, the timing alone guarantees limited impact.

I've started calling this the Cadence Problem. And I think it explains why so much excellent VoC work dies on arrival.

The Three Jobs Hiding Inside Every VoC Report

Here's what I've come to believe: the Cadence Problem persists because we're asking a single artifact to do three fundamentally different jobs.

Job 1: Build Awareness. Surface what's happening. What are customers saying? What themes are emerging? What's changed since last quarter? This job requires breadth and recency. Leaders need to know the landscape exists.

Job 2: Drive Understanding. Go deep on specific issues. What's the use case behind this request? Which customer segments feel this most acutely? What's the context that makes this problem urgent? This job requires depth and nuance.

Job 3: Influence Prioritization. Connect insights to decisions. How does this feedback relate to revenue? What's the cost of inaction? Where does this rank against other priorities? This job requires business context and timing alignment.

Most VoC programs attempt all three jobs through a single quarterly report, delivered in a single meeting.

That's an enormous ask.

Think about it from your audience's perspective. In 60 minutes, you're asking them to learn what's happening (awareness), understand why it matters (understanding), and decide what to do about it (prioritization). Each job has different cognitive demands. Each benefits from different cadences. Each requires different preparation from your audience.

When you bundle all three into one artifact, something has to give. Usually, it's the prioritization influence. Leaders absorb the information but defer decisions to "follow up later." Later rarely comes.

Why This Structure Persists

If the bundled approach underperforms, why do organizations keep doing it?

Partly, it's inherited practice. Quarterly business reviews create natural checkpoints. VoC reporting slotted into that rhythm decades ago.

Partly, it's resource constraints. Building one comprehensive report per quarter is already a heavy lift for most teams. Unbundling into multiple workflows sounds like more work.

Partly, it's visibility concerns. The quarterly report is your moment to demonstrate value. If insights flow continuously through other channels, does your work become invisible?

These are real concerns. I don't dismiss them. But I've also watched AI fundamentally change what's possible in the last 18 months. Constraints that shaped program design for years are starting to dissolve.

What AI Now Makes Possible

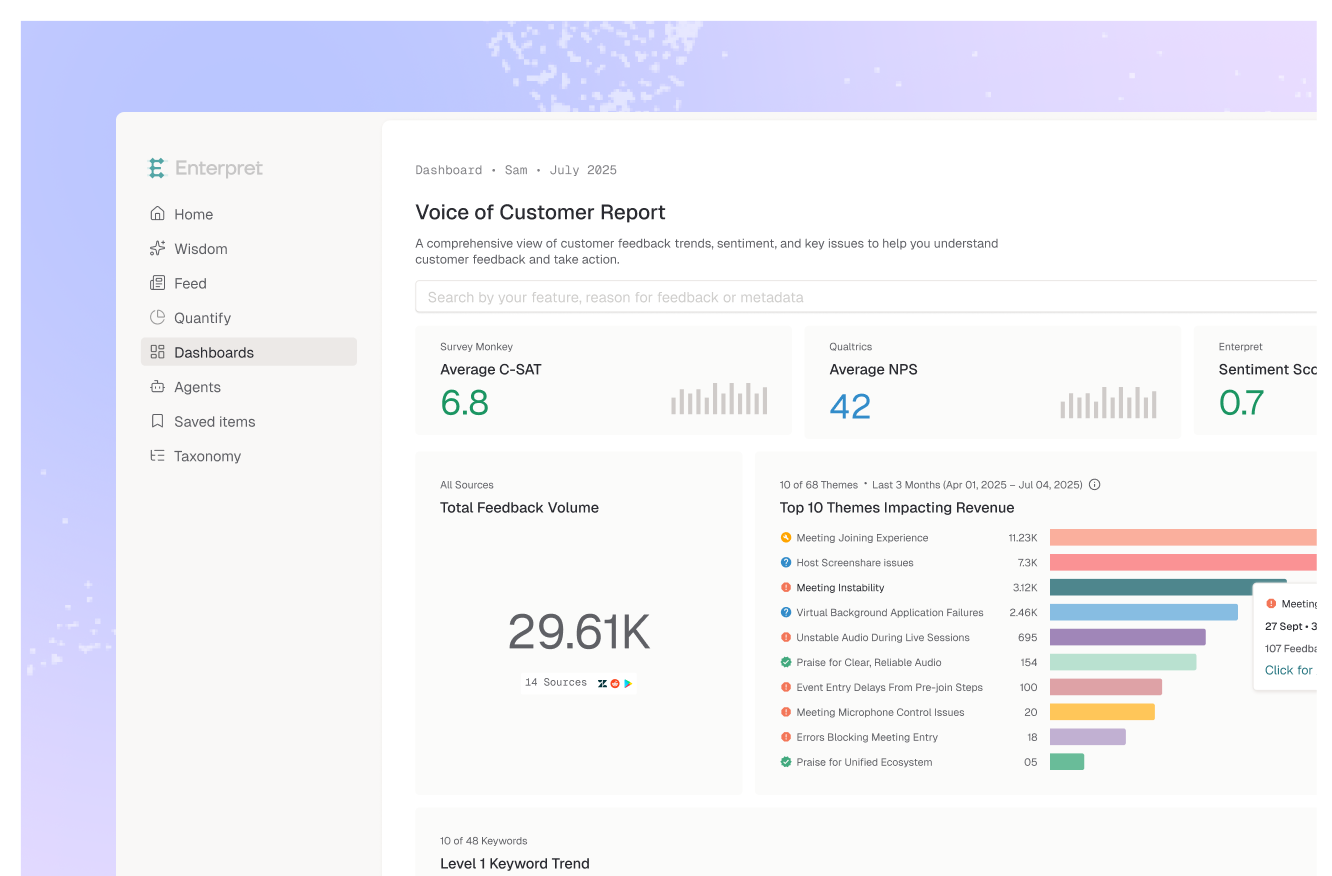

At Enterpret, we've been experimenting with what happens when you deliberately unbundle the three jobs.

For awareness: We intentionally build ambient awareness as a system, not a report. Daily and weekly digests summarize what customers have said recently across a broad range of topics. Purpose-built agents monitor for anomalies and handle escalation alerts based on emotional intensity and risk level. Meeting notes and highlights are broadcast automatically after customer calls. The goal is a continuous, low-intensity signal that keeps everyone oriented to the customer landscape without requiring dedicated meetings.
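To make the split between digest and escalation concrete, here is a minimal sketch in Python. It is an illustration only, not how Enterpret's agents work: the `FeedbackItem` fields, the 0-to-1 scores, and the thresholds are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FeedbackItem:
    text: str
    emotional_intensity: float  # hypothetical score in [0, 1]
    risk_level: float           # hypothetical score in [0, 1]


def route(item: FeedbackItem,
          intensity_threshold: float = 0.8,
          risk_threshold: float = 0.7) -> str:
    """Return "alert" if either score crosses its (assumed) threshold, else "digest"."""
    if (item.emotional_intensity >= intensity_threshold
            or item.risk_level >= risk_threshold):
        return "alert"
    return "digest"


def split_feedback(items: List[FeedbackItem]) -> Tuple[List[FeedbackItem], List[FeedbackItem]]:
    """Separate routine items (for the daily or weekly digest) from escalations."""
    digest: List[FeedbackItem] = []
    alerts: List[FeedbackItem] = []
    for item in items:
        if route(item) == "alert":
            alerts.append(item)
        else:
            digest.append(item)
    return digest, alerts
```

The point of the sketch is the shape of the system: most feedback flows into a low-intensity digest, and only a small, rule-defined slice interrupts anyone.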

The result: when you walk into a quarterly meeting, there's no new information. Leaders already know what customers are saying about their areas. Surprise is eliminated. That's a feature, not a bug.

For understanding: We've reframed this as a shared responsibility rather than something that falls 100% on the report producer. Every employee gets onboarded to Enterpret on their first day. They use Wisdom, our conversational interface, to explore what customers are saying about their area. They dive into citations, read transcripts, watch customer calls. The goal isn't to make them wait for the next report. It's to accelerate their customer intuition so they can pull context when they need it.

For prioritization: With awareness and understanding handled through other channels, meetings transform. Instead of education, they become pure alignment and accountability. Review the same list of top needs. Track what's been addressed. Assign ownership for what hasn't. Measure progress over time.
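As a rough illustration of what that recurring review could track, here is a small Python sketch. The `CustomerNeed` record and the `review` helper are hypothetical names invented for this example, and a real program would likely track more than an owner and a done flag.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CustomerNeed:
    name: str
    owner: Optional[str] = None  # None means nobody has been assigned yet
    addressed: bool = False


def review(needs: List[CustomerNeed]) -> Dict[str, object]:
    """Summarize one review of the standing list of top customer needs."""
    addressed = [n.name for n in needs if n.addressed]
    # Open needs without an owner are the ones the meeting must assign.
    unowned = [n.name for n in needs if not n.addressed and n.owner is None]
    progress = len(addressed) / len(needs) if needs else 0.0
    return {"addressed": addressed, "unowned": unowned, "progress": progress}
```

Running `review` on the same list at every meeting gives a simple progress number to compare over time and a short list of needs that still lack an owner.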

Recently, I generated a comprehensive customer insights report in 10 minutes. It pulled together customer feedback and product analytics to support a recommendation for changing our core new-user onboarding flow. Rather than waiting for the next VoC meeting, we made the decision in a single Slack thread.

That same type of report used to take my team at Asana two months.

The speed is impressive. But I keep coming back to a bigger question: is speed the real unlock, or does it enable entirely different workflows?

The Questions I'm Still Sitting With

I don't want to oversell certainty here. We're early in understanding what the new model looks like. Several questions remain genuinely open:

When does push still beat pull? Ambient awareness systems work for known priorities. But what about emerging issues that leaders don't know to look for? Some signal still needs to be pushed, not just made available.

How do you maintain rigor in continuous systems? Quarterly reports force a quality checkpoint. When insights flow continuously, how do you ensure accuracy and avoid noise fatigue?

What happens to the VoC role? If awareness is automated and understanding is self-serve, what does the VoC leader focus on? I believe the answer is infrastructure design and strategic interpretation. But that's a significant role evolution.

Does this work for all organizations? Ambient systems require baseline data infrastructure and cultural readiness. Not every company is there yet.

An Invitation

Kate's original post asked: "Is anyone using AI to deliver timely, accurate VoC reports?"

The answer is yes. But I think the more interesting question is whether "timely, accurate reports" is still the right frame. AI doesn't just make reports faster. It potentially makes the report itself optional for certain jobs.

I'm not advocating that anyone abandon their current programs. If quarterly reports are working for you, keep running them. But if you're feeling the Cadence Problem, if great work isn't translating to impact, the issue might be structural rather than executional.

We're actively exploring these questions at Enterpret. We don't have all the answers. But we're learning a lot from practitioners who are experimenting alongside us.

If you're rethinking how customer insights flow through your organization, I'd genuinely love to hear what you're trying. What's working? What's failing? Where are you stuck?

The future of this work will be shaped by practitioners in the field, not vendors declaring the answers. Let's figure it out together.