Why Customer Intelligence Requires Infrastructure, Not Just AI

The three paths to customer feedback analysis—and why two of them fail at scale.

Every product leader eventually faces the same question: how do we systematically understand what customers are telling us?

The feedback exists. It's scattered across Zendesk tickets, NPS surveys, G2 reviews, sales call transcripts, Reddit threads, and Slack community channels. The problem isn't access; it's transformation: turning that noise into decisions.

Today, three paths dominate the conversation:

- Upload CSVs to ChatGPT or Claude and ask questions directly

- Build an internal app using RAG (retrieval-augmented generation) on top of your feedback sources

- Buy purpose-built infrastructure designed for customer intelligence

Path one feels magical the first time you try it. Path two feels like the obvious engineering solution. Path three feels like the vendor pitch you've heard before.

This post explains why the first two systematically fail—and what "purpose-built infrastructure" actually means in practice.

The ChatGPT Trap: Exploration Without Memory

The first time you paste a thousand NPS comments into ChatGPT, the experience is genuinely impressive. Themes emerge in seconds. Summaries feel coherent. You copy the output into a slide and move on.

The problems surface in month two.

You run the same analysis on new data and get different theme labels. "Onboarding friction" becomes "setup challenges" becomes "first-time user experience." The drift is subtle enough to ignore—until someone asks whether onboarding complaints are trending up or down, and you realize there's no consistent baseline to compare against.

ChatGPT and Claude are stateless by design. Every session starts from zero. There's no taxonomy that persists, no definitions that carry forward, no connection between this quarter's analysis and last quarter's. The model doesn't remember that "export timeout" and "download slow" map to the same underlying product area. It doesn't know that complaints from your enterprise segment matter more than complaints from free-trial users. It can't connect a theme to specific accounts, ARR bands, or deal stages.
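To make "a taxonomy that persists" concrete, here is a minimal sketch of an alias table that lives outside any chat session. The theme names `export_performance` and `onboarding_friction` and the JSON layout are assumptions for illustration; only the example phrasings come from the text above.

```python
import json
from typing import Optional

# Hypothetical persisted taxonomy: canonical theme -> phrasings that map to it.
# In practice this would live in a file or database, not in a chat session.
TAXONOMY_JSON = """
{
  "export_performance": ["export timeout", "download slow"],
  "onboarding_friction": ["setup challenges", "first-time user experience"]
}
"""

def load_alias_index(raw: str) -> dict:
    """Invert the taxonomy so each known phrasing points at one canonical theme."""
    index = {}
    for theme, aliases in json.loads(raw).items():
        index[theme.replace("_", " ")] = theme  # the theme's own name is an alias too
        for alias in aliases:
            index[alias.lower()] = theme
    return index

def canonical_theme(label: str, index: dict) -> Optional[str]:
    """Return the stable theme for a model-generated label, or None if unknown."""
    return index.get(label.strip().lower())

ALIASES = load_alias_index(TAXONOMY_JSON)
print(canonical_theme("Setup challenges", ALIASES))  # onboarding_friction
print(canonical_theme("download slow", ALIASES))     # export_performance
```

Whatever label a session produces this quarter, it resolves to the same key as last quarter, which is what makes a trend line possible. Unknown labels return `None` so they can be reviewed rather than silently becoming new themes.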

When feedback influences roadmap priorities or executive conversations, that statelessness becomes a liability. Leaders ask for evidence. You scramble to find the original comments behind the summary. The traceability isn't there because it was never built.

Better prompts don't fix this. The issue isn't the model—it's the absence of structure underneath it.

The RAG Trap: Search Disguised as Analytics

Building an internal solution feels like the obvious next step. You've got embeddings. You've got an LLM. Just pipe feedback into a vector store, retrieve relevant chunks at query time, and let the model synthesize.

This is RAG—retrieval-augmented generation. It works brilliantly for finding examples. "Show me feedback about checkout" returns relevant results. The problem is that finding examples isn't the same as analyzing feedback.

RAG is fundamentally a search architecture. It answers "find things similar to X." It cannot reliably answer "count things matching X" or "group by platform" or "trend over time." Those are database operations, not retrieval operations.
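The difference between retrieving and counting is easy to see in a toy sketch. Everything below is invented for illustration: the records, the `topic` tags, and the word-overlap scoring, which stands in for embedding similarity.

```python
from collections import Counter

# Toy records; texts, platforms, and "topic" tags are invented for illustration.
FEEDBACK = [
    {"id": 1, "text": "checkout fails on android", "platform": "android", "topic": "checkout"},
    {"id": 2, "text": "checkout button missing", "platform": "ios", "topic": "checkout"},
    {"id": 3, "text": "cannot pay at checkout", "platform": "android", "topic": "checkout"},
    {"id": 4, "text": "love the new dashboard", "platform": "web", "topic": "dashboard"},
    {"id": 5, "text": "payment page crashes", "platform": "android", "topic": "checkout"},
]

def retrieve(query: str, k: int) -> list:
    """Stand-in for vector search: rank by shared words, return only the top k."""
    q = set(query.lower().split())
    ranked = sorted(FEEDBACK, key=lambda r: len(q & set(r["text"].split())), reverse=True)
    return ranked[:k]

def count_by(field: str, **filters) -> Counter:
    """Database-style aggregation over pre-tagged records: exact and repeatable."""
    rows = [r for r in FEEDBACK if all(r[f] == v for f, v in filters.items())]
    return Counter(r[field] for r in rows)

top = retrieve("checkout", k=2)
print(len(top))                                # 2: capped by k, not by how many exist
print(count_by("platform", topic="checkout"))  # Counter({'android': 3, 'ios': 1})
```

Retrieval returns at most `k` items no matter how many match, so any count derived from it is a count of the sample. The aggregation sees every tagged record, including record 5, which never mentions the word "checkout".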

Consider what happens when you ask: "What are the top complaints about our Meetings product?"

A RAG system retrieves the top-k most similar documents to "complaints about Meetings," stuffs them into a context window, and asks the model to summarize. Tomorrow, with different sampling, you get a different answer. The counts shift. The categories change. There's no ground truth because interpretation happens at runtime, not at ingestion.

Now add a follow-up: "Which of those are specific to Android?"

The system re-retrieves. Context from the first query is partially lost. By the third or fourth hop—"Which Android version?" "Which accounts?"—you're essentially starting over. The model is guessing at relationships that were never encoded.

The failure mode is subtle. RAG systems feel like they're answering questions. They produce fluent responses. But fluency isn't accuracy. And when you're making product decisions, the difference matters.

Why Interpretation at Query Time Fails

The deeper issue is that individual feedback is often uninterpretable in isolation.

"don't lag 😅😅😅"

"lag is so annoying"

"slow loading times"

What's lagging? The editor? Export? Playback? AI features? You cannot know from the text alone. The voice of the customer is captured as-is—ambiguous, context-dependent, sometimes contradictory.

RAG approaches try to interpret at query time: retrieve matches, stuff into context, ask the model to categorize. The result is inconsistent because the interpretation is inconsistent. Different samples, different context windows, different conclusions.

The alternative is interpretation at ingestion. Preprocess once, classify against a stable taxonomy, and query structured data forever. The same query tomorrow returns the same result (unless new data arrived). Follow-ups are filters on pre-classified records, not re-retrievals. Trends become calculable because you're comparing apples to apples across time.
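A minimal sketch of interpretation at ingestion follows. The keyword rules are a deliberately crude, hypothetical stand-in for a real classifier; the point is where classification runs (once, when feedback arrives), not how it is implemented.

```python
from dataclasses import dataclass

# Hypothetical keyword rules standing in for a real classifier.
RULES = {
    "export": ("export", "download"),
    "editor": ("editor", "typing"),
}

@dataclass(frozen=True)
class Record:
    id: int
    text: str
    platform: str
    category: str  # assigned once at ingestion and stored with the record

def ingest(raw: list) -> list:
    """Classify each item once and persist the label alongside the text."""
    records = []
    for item in raw:
        text = item["text"].lower()
        category = next(
            (name for name, words in RULES.items() if any(w in text for w in words)),
            "unclassified",
        )
        records.append(Record(item["id"], item["text"], item["platform"], category))
    return records

def query(records: list, **filters) -> list:
    """Follow-up questions are filters over stored fields, not new retrievals."""
    return [r for r in records if all(getattr(r, k) == v for k, v in filters.items())]

STORE = ingest([
    {"id": 1, "text": "Export takes forever", "platform": "android"},
    {"id": 2, "text": "Editor freezes while typing", "platform": "ios"},
    {"id": 3, "text": "Download of export is slow", "platform": "android"},
])

exports = query(STORE, category="export")
android_exports = query(exports, platform="android")
print([r.id for r in android_exports])  # [1, 3]
```

Running the same `query` calls again returns the same records until `ingest` is called with new data, and each result still carries the original comment text and id.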

Here's what that looks like in practice. Instead of "performance complaints" as an undifferentiated blob, you get:

Now "performance" has meaning. Export is the top issue, not editor load. You can pivot by platform, by segment, by time period—because the structure exists to support those operations.

This requires preprocessing. You cannot aggregate by category or pivot by platform if you're interpreting at query time.

The Taxonomy Problem Nobody Anticipates

Most teams underestimate taxonomy entirely. It seems like a configuration step—create some categories, move on. In practice, it's the core technical challenge.

A useful taxonomy must:

- Reflect your specific product areas and features, not generic categories

- Distinguish bugs from feature requests from complaints from praise

- Support multiple levels of granularity (broad themes AND specific issues)

- Evolve as your product changes without breaking historical analysis

- Map between internal language (your roadmap) and external language (how customers talk)

The depth trade-off is real. Shallow taxonomies (1-2 levels) are easy to maintain but produce generic insights. "Chat has issues" tells you nothing. Deep taxonomies (3+ levels) produce actionable insights but are hard to build and harder to maintain.

And then there's scale. Zoom's outside-in taxonomy—built from public feedback alone—has 19 L1 categories, 95 L2s, 349 L3s, plus 5,011 themes and 11,397 subthemes. That's roughly 17,000 classification nodes. With internal data (support tickets, sales calls, enterprise feedback), a full taxonomy is typically 10x larger.
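The "roughly 17,000" figure is just the sum of the per-level counts quoted above, which a few lines confirm:

```python
# Node counts per level, as quoted in the paragraph above.
levels = {"L1": 19, "L2": 95, "L3": 349, "themes": 5_011, "subthemes": 11_397}

total = sum(levels.values())
print(total)  # 16871
```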

Predicting against roughly 17,000 labels isn't a configuration problem. It's a research-grade ML problem. You need high precision (correct tags) AND high recall (don't miss relevant tags) across a label space that's constantly evolving. Traditional multi-class classification, which assigns each item exactly one label from a fixed set, fails at this scale.
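Because a single comment can carry several tags, precision and recall here are measured over sets of labels per item. The sketch below uses micro-averaging, which is one common choice and an assumption on our part; the label names are invented.

```python
def micro_precision_recall(predicted: list, actual: list) -> tuple:
    """Micro-averaged precision and recall over per-item label sets."""
    true_pos = sum(len(p & a) for p, a in zip(predicted, actual))
    n_predicted = sum(len(p) for p in predicted)
    n_actual = sum(len(a) for a in actual)
    precision = true_pos / n_predicted if n_predicted else 0.0
    recall = true_pos / n_actual if n_actual else 0.0
    return precision, recall

# Two feedback items, each with a set of predicted and a set of correct tags.
predicted = [{"export/slow", "android/crash"}, {"editor/lag"}]
actual = [{"export/slow"}, {"editor/lag", "playback/lag", "ios/crash"}]

p, r = micro_precision_recall(predicted, actual)
print(round(p, 2), round(r, 2))  # 0.67 0.5
```

Precision drops when the system attaches a tag that doesn't belong (`android/crash`). Recall drops when it misses tags that do (`playback/lag`, `ios/crash`). With thousands of labels, both failure modes show up at once.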

The Hidden Tax on Engineering

Retool's State of Internal Tools research found that engineers spend an average of 33% of their time building and maintaining internal tools. For companies with 5,000+ employees, that number jumps to 45%.

Exclaimer's 2025 Build vs Buy report found that 71% of in-house IT builds fail to deliver on time or on budget. Researchers call it "The DIY Mirage"—a false sense of control that fades as maintenance demands compound.

Customer intelligence systems are particularly susceptible to this mirage. The prototype is easy. Pipe feedback into a vector store, wire up an LLM, build a simple UI. Two sprints, maybe three.

Then reality sets in.

Each feedback channel requires custom parsing. Support tickets need actor attribution (user's issue ≠ agent's instructions). Forum threads need hierarchy reconstruction. Call transcripts need speaker diarization and long-range context ("that issue" at minute 41 refers to a complaint at minute 3). Surveys need question-answer pairing to interpret ambiguous responses.
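Two of these parsing steps are small enough to sketch. The ticket and survey shapes below (`messages`, `author_role`, question ids) are hypothetical schemas, not any vendor's actual export format.

```python
# Hypothetical ticket shape; real helpdesk exports differ by vendor.
TICKET = {
    "id": "T-1",
    "messages": [
        {"author_role": "customer", "body": "Export hangs at 90%."},
        {"author_role": "agent", "body": "Please clear your cache and reinstall."},
        {"author_role": "customer", "body": "Still broken after reinstall."},
    ],
}

def customer_voice(ticket: dict) -> list:
    """Actor attribution: keep the customer's words, drop the agent's instructions."""
    return [m["body"] for m in ticket["messages"] if m["author_role"] == "customer"]

def pair_survey(questions: dict, answers: dict) -> list:
    """Q&A pairing: attach each answer to its question so short replies stay readable."""
    return [
        f"Q: {questions[qid]} A: {text}"
        for qid, text in answers.items()
        if qid in questions
    ]

print(customer_voice(TICKET))
print(pair_survey({"q1": "What would you improve?"}, {"q1": "speed"}))
```

Without the first step, "clear your cache and reinstall" gets classified as if the customer said it. Without the second, an answer like "speed" has no referent.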

Metadata requires normalization. App Store versions, browser versions from support tickets, crash log OS versions—all need mapping to a unified schema.
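As a sketch of what "mapping to a unified schema" involves, the function below turns a few OS strings into one shape. The alias table, the output fields, and the sample strings are assumptions for illustration; real sources produce far messier values.

```python
import re
from typing import Optional

# Hypothetical alias table: substrings seen in raw metadata -> canonical OS family.
OS_ALIASES = {"android": "android", "iphone os": "ios", "ios": "ios"}

# Matches "17.2", "17_2", or a bare major version like "14".
VERSION_RE = re.compile(r"(\d+)(?:[._](\d+))?")

def normalize_os(raw: str) -> Optional[dict]:
    """Map a raw OS string to {"os", "major", "minor"}, or None if unrecognized."""
    text = raw.strip().lower()
    for alias, family in OS_ALIASES.items():
        pos = text.find(alias)
        if pos == -1:
            continue
        # Look for a version number only after the OS name.
        match = VERSION_RE.search(text, pos + len(alias))
        major = int(match.group(1)) if match else None
        minor = int(match.group(2) or 0) if match else None
        return {"os": family, "major": major, "minor": minor}
    return None

print(normalize_os("iOS 17.2"))        # {'os': 'ios', 'major': 17, 'minor': 2}
print(normalize_os("iPhone OS 17_2"))  # {'os': 'ios', 'major': 17, 'minor': 2}
print(normalize_os("Android 14"))      # {'os': 'android', 'major': 14, 'minor': 0}
```

Once every source lands in the same shape, "group by platform" is a lookup on one field instead of a string-matching exercise repeated in every query.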

Taxonomy requires continuous evolution. New features ship. Products rename. Use cases expand. Every change requires backfilling historical data to maintain trend integrity.
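One way to think about backfilling is as a rename log replayed over historical labels. The log format and the label paths below are hypothetical; a real migration also has to handle splits and merges, which this sketch does not.

```python
# Hypothetical rename log: (old label prefix, new label prefix), in release order.
RENAMES = [
    ("video_calls", "meetings"),
    ("meetings/chat", "team_chat"),
]

def migrate(label: str) -> str:
    """Replay every rename in order so old labels land on their current names."""
    for old, new in RENAMES:
        if label == old or label.startswith(old + "/"):
            label = new + label[len(old):]
    return label

def backfill(records: list) -> list:
    """Return copies of historical records with labels brought up to date."""
    return [{**r, "label": migrate(r["label"])} for r in records]

history = [
    {"id": 1, "label": "video_calls/chat"},
    {"id": 2, "label": "video_calls/audio"},
    {"id": 3, "label": "billing"},
]
print([r["label"] for r in backfill(history)])
# ['team_chat', 'meetings/audio', 'billing']
```

Replaying the log in order matters: a record tagged before the first rename has to pass through both steps to end up where today's records do.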

Observability requires infrastructure. Detect classification drift. Surface source failures. Alert on silent processing errors.
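A simple drift check compares the label mix between two time windows. The sketch below uses total variation distance and a threshold of 0.2, both of which are illustrative choices rather than a recommended setting; the label counts are invented.

```python
from collections import Counter

def label_shares(labels: list) -> dict:
    """Fraction of items carrying each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}

def total_variation(a: list, b: list) -> float:
    """Half the summed absolute difference in label shares (0 = same, 1 = disjoint)."""
    pa, pb = label_shares(a), label_shares(b)
    return 0.5 * sum(abs(pa.get(k, 0.0) - pb.get(k, 0.0)) for k in set(pa) | set(pb))

last_week = ["export"] * 50 + ["editor"] * 50
this_week = ["export"] * 20 + ["editor"] * 30 + ["unclassified"] * 50

DRIFT_THRESHOLD = 0.2  # hypothetical; tune against your own history
drift = total_variation(last_week, this_week)
print(round(drift, 2), drift > DRIFT_THRESHOLD)  # 0.5 True
```

A jump in `unclassified` like this one can mean a source changed format or the classifier stopped matching. It can also mean customers really did start talking about something new, so the alert is a prompt to look, not a verdict.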

The question isn't whether your team could build this. The question is whether they should.

Forrester's Software Development Trends Report found that 67% of failed software implementations stem from incorrect build-vs-buy decisions. Most of those failures don't happen at launch. They happen in year two, when the maintenance burden compounds and the original builders have moved on.

What Purpose-Built Infrastructure Actually Means

The phrase "purpose-built platform" gets thrown around loosely. Here's what it means concretely for customer intelligence:

Adaptive Taxonomy: A classification system that learns your product's structure and vocabulary, maintains hierarchical relationships (feedback about "Express Checkout" counts toward both "Checkout" and "Payments"), and evolves as your product evolves—without breaking historical comparisons.
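The "Express Checkout" example implies a node can have more than one parent, so rollups need to walk a graph rather than a strict tree. Here is a minimal sketch of that counting; the `PARENTS` structure and function names are our own, and only the three node names come from the text.

```python
from collections import Counter

# Each node lists its direct parents; a node may have more than one.
PARENTS = {
    "Express Checkout": ["Checkout", "Payments"],
    "Checkout": [],
    "Payments": [],
}

def ancestors(node: str, parents: dict) -> set:
    """All nodes reachable by following parent links upward."""
    seen = set()
    stack = list(parents.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents.get(current, []))
    return seen

def rollup(tags: list, parents: dict) -> Counter:
    """Count each tagged item once toward its own node and every ancestor."""
    totals = Counter()
    for tag in tags:
        for node in {tag} | ancestors(tag, parents):
            totals[node] += 1
    return totals

totals = rollup(["Express Checkout", "Express Checkout", "Payments"], PARENTS)
print(totals["Express Checkout"], totals["Checkout"], totals["Payments"])  # 2 2 3
```

Using a set of ancestors means an item is counted once per node even if two paths lead to the same ancestor, which keeps parent totals from being inflated.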

Knowledge Graph: Entity resolution that connects feedback to customers, accounts, segments, deal stages, and revenue. Not just "what are users saying" but "what are our enterprise customers in EMEA saying about features we shipped last quarter."
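At its simplest, connecting feedback to revenue is a join between feedback records and account records. The sketch below uses in-memory dictionaries with invented account ids, segments, and ARR figures; a real system would resolve identities across a CRM and multiple feedback sources.

```python
# Invented accounts and feedback; ids, segments, and ARR values are illustrative.
ACCOUNTS = {
    "acct-1": {"segment": "enterprise", "region": "EMEA", "arr": 250_000},
    "acct-2": {"segment": "smb", "region": "NA", "arr": 12_000},
    "acct-3": {"segment": "enterprise", "region": "EMEA", "arr": 400_000},
}
FEEDBACK = [
    {"id": "f1", "account_id": "acct-1", "theme": "sso"},
    {"id": "f2", "account_id": "acct-2", "theme": "sso"},
    {"id": "f3", "account_id": "acct-3", "theme": "export"},
    {"id": "f4", "account_id": "acct-3", "theme": "sso"},
]

def themes_for(segment: str, region: str) -> dict:
    """Per theme: mention count and total ARR of the distinct accounts raising it."""
    grouped = {}
    for fb in FEEDBACK:
        account = ACCOUNTS.get(fb["account_id"])
        if not account or account["segment"] != segment or account["region"] != region:
            continue
        entry = grouped.setdefault(fb["theme"], {"mentions": 0, "accounts": set()})
        entry["mentions"] += 1
        entry["accounts"].add(fb["account_id"])
    return {
        theme: {
            "mentions": e["mentions"],
            "arr": sum(ACCOUNTS[a]["arr"] for a in e["accounts"]),
        }
        for theme, e in grouped.items()
    }

print(themes_for("enterprise", "EMEA"))
# {'sso': {'mentions': 2, 'arr': 650000}, 'export': {'mentions': 1, 'arr': 400000}}
```

ARR is summed over distinct accounts, not mentions, so one vocal customer doesn't count their revenue twice.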

Preprocessing at Ingestion: Classification happens once, when feedback arrives, against stable definitions. Queries become filters on structured data, not runtime interpretation. The same question tomorrow returns the same answer.

Channel-Native Parsing: Battle-tested extractors for 50+ feedback sources that handle actor attribution, thread hierarchy, speaker diarization, Q&A pairing, and metadata normalization out of the box.

Traceability: Every insight connects to specific customer comments. When a decision is challenged, the evidence is accessible in seconds.

This is the infrastructure RAG approaches eventually have to build anyway—if they want to move from "finding examples" to "making decisions."

The Comparison

The Bottom Line

You have three paths. ChatGPT gives you exploration without memory. RAG gives you search disguised as analytics. Both leave you reinventing the wheel every quarter, or building the preprocessing infrastructure yourself.

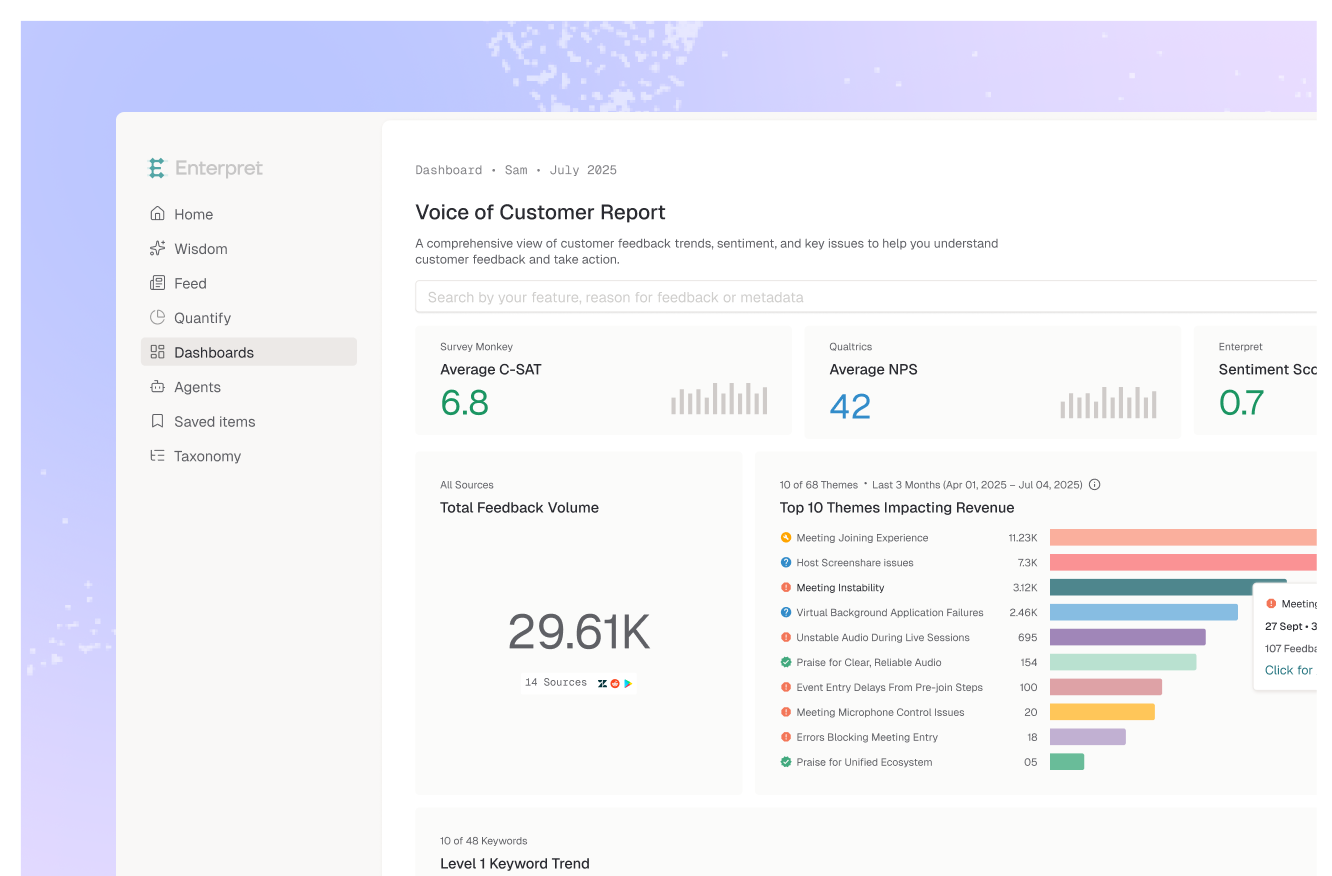

Enterpret is that infrastructure, delivered as a product. Adaptive Taxonomy that evolves with your product. A Knowledge Graph that connects feedback to revenue. Preprocessing that makes every query instant and every trend comparable.

The teams using it—Notion, Canva, Descript, Apollo—aren't debugging integrations or maintaining classification pipelines. They're making decisions.
